The Foundation has funded a couple of projects to investigate the feasibility of developing a passive measure of student engagement, using galvanic skin response (GSR).

The ridicule comes from an assumption that it won't work.

GSR basically measures how sweaty you are. Two leads are placed on the skin. One applies a very mild electrical current. The other measures how much of it gets through. The more sweat on your skin, the better your skin conducts electricity, so the stronger the signal the second lead picks up.

Who cares how sweaty your skin is?

Sweat--as well as heart rate, respiration rate and a host of other physiological signs controlled by the peripheral nervous system--varies with your emotional state.

Can you tell whether a student is paying attention from these data?

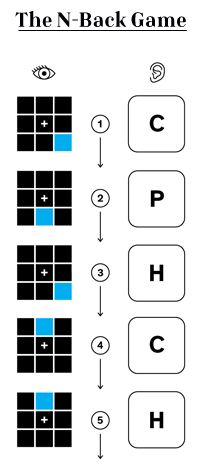

It's at least plausible that it could be made to work. There has long been controversy over how separable different emotional states are, based on these sorts of metrics. It strikes me as a tough problem, and we're clearly not there yet, but the idea is far from kooky, and indeed, the people who have been arguing it's possible have been making some progress--this lab group says they've successfully distinguished engagement, relaxation and stress. (Admittedly, they gathered a lot more data than just GSR, and one measure they collected was EEG, a measure of the central, not peripheral, nervous system.)

The grave concern springs from the possible use to which the device would be put.

A Gates Foundation spokeswoman says the plan is that a teacher would be able to tell, in real time, whether students are paying attention in class. (Earlier the Foundation website indicated that the grant was part of a program meant to evaluate teachers, but that was apparently an error.)

Some have objected that such measurement would be insulting to teachers. After all, can't teachers tell when their students are engaged, or bored, or frustrated, etc.?

I'm sure some can, but not all of them. And it's a good bet that beginning teachers can't make these judgements as accurately as their more experienced colleagues, and beginners are just the ones who need this feedback. Presumably the information provided by the system would be redundant to teachers who can read it in their students' faces and body language, and these teachers will simply ignore it.

I would hope that classroom use would be optional--GSR bracelets would enter classrooms only if teachers requested them.

Of greater concern to me are the rights of the students. Passive reading of physiological data without consent feels like an invasion of privacy. Parental consent ought to be obligatory. Then too, what about HIPAA? What is the procedure if a system that measures heartbeat detects an irregularity?

These two concerns--the effect on teachers and the effect on students--strike me as serious, and people with more experience than I have in ethics and in the law will need to think them through with great care.

But I still think the project is a terrific idea, for two reasons, neither of which has received much attention in all the uproar.

First, even if the devices were never used in classrooms, researchers could put them to good use.

I sat in on a meeting a few years ago of researchers considering a grant submission (not to the Gates Foundation) on this precise idea--using peripheral nervous system data as an on-line measure of engagement. (The science involved here is not really in my area of expertise, and I had no idea why I was asked to be at the meeting, but that seems to be true of about two-thirds of the meetings I attend.) Our thought was that the device would be used by researchers, not teachers and administrators.

Researchers would love a good measure of engagement because the proponents of new materials or methods so often claim "increased engagement" as a benefit. But how are researchers supposed to know whether or not the claim is true? Teacher or student judgements of engagement are subject to memory loss and to well-known biases.

In addition, I see potentially great value for parents and teachers of kids with disabilities. For example, have a look at these two pictures.

Esprit can never tell me that she's engaged either with words or signs. But I'm comfortable concluding that she is engaged at moments like that captured in the top photo--she's turning the book over in her hands and staring at it intently.

In the photo at the bottom, even I, her dad, am unsure of what's on her mind. (She looks sleepy, but isn't--ptosis, or drooping upper eyelids, is part of the profile.) If Esprit wore this expression while gazing towards a video, for example, I wouldn't be sure whether she was engaged by the video or was spacing out.

Are there moments when I would slap a bracelet on her if I thought it could measure whether or not she was engaged?

You bet your sweet bippy there are.

I'm not the first to think of using physiologic data to measure engagement in people with disabilities that make it hard to make their interests known. In this article, researchers sought to reduce the communication barriers that exclude children with disabilities from social activities; the kids might be present, but because of their difficulties describing or showing their thoughts, they cannot fully participate in the group. Researchers reported some success in distinguishing engaged from disengaged states of mind from measures of blood volume pulse, GSR, skin temperature, and respiration in nine young adults with muscular dystrophy or cerebral palsy.

I respect the concerns of those who see the potential for abuse in the passive measurement of physiological data. At the same time, I see the potential for real benefit in such a system, wisely deployed.

When we see the potential for abuse, let's quash that possibility, but let's not let it blind us to the possibility of the good that might be done.

And finally, because Esprit didn't look very cute in the pictures above, I end with this picture.
