Claims of scientific support (e.g., here) have been controversial (see here), and part of the problem is that many of the studies, even ones claiming "gold standard" methodologies, have not been conducted in the ideal way. This controversy usually arises after the fact: researchers claim that brain training works, and critics point out flaws in the study design.

A new study has examined more directly the possibility that brain training gains are due to placebo effects, and it indicates that's likely.

Cyrus Foroughi and his colleagues at George Mason University set out to test the possibility that knowing you are in a study that purportedly improves intelligence will affect your performance on the relevant tests. The independent variable in their study was method of recruitment via an advertising flyer: either you knew you were signing up for brain training or you didn't.

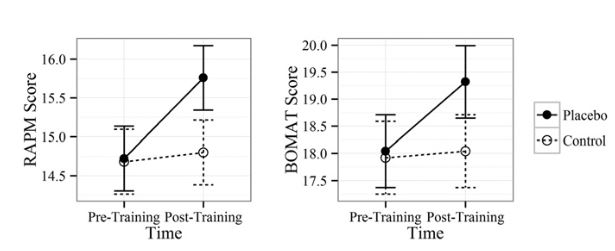

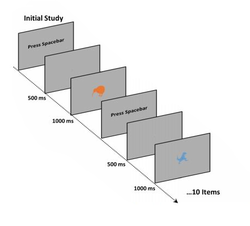

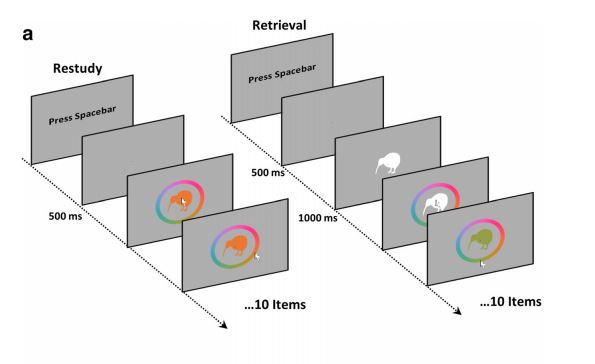

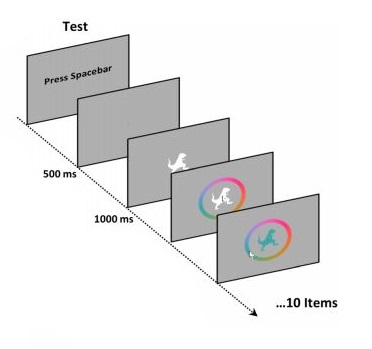

All participants went through the same experimental procedure. They took two standard fluid intelligence tests. Then they participated in one hour of working memory training, the oft-used N-back task. The final outcome measures--the fluid intelligence tests--took place the following day.

Even advocates of brain training would agree that a single hour of practice is not enough to produce any measurable effect. Yet subjects who thought brain training would make them smarter improved. Control subjects did not.

Most published experiments on brain training had not reported whether subjects were recruited in a way that made the purpose plain. Foroughi and his colleagues contacted the researchers behind 19 published studies that were included in a meta-analysis and found that in 17 of these, subjects knew the purpose of the study.

It should be noted that this new experiment does not show conclusively that brain training cannot work. It shows that placebo effects appear to be very easy to obtain in this type of research. I dare say it's an even more dramatic problem than critics had appreciated, and more than ever, the onus is on advocates of brain training to show their methods work.
