I tasted that pleasure this week, courtesy of a paper by Walter Boot and colleagues (2013).

The paper concerned the adequacy of control groups in intervention studies--interventions like (but not limited to) "brain games" meant to improve cognition, and the playing of video games, thought to improve certain aspects of perception and attention.

The performance of the control group is to be compared to the performance of the experimental group, which should allow an assessment of the impact of the critical variable on the outcome measure.

Now consider video gaming or brain training. Subjects in an experiment might very well guess the suspected relationship between the critical variable and the outcome. They have an expectation as to what is likely to happen. If they do, then there might be a placebo effect--people perform better on the outcome test simply because they expect that the training will help, just as some people feel less pain when given a placebo that they believe is an analgesic.

The purpose of the active control is to make expectations equivalent in the two groups. Boot et al.'s simple and valid point is that it probably doesn't do that. People don't believe playing The Sims will improve attention.
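To make the logic concrete, here is a toy sketch of how unequal expectations alone could produce a group difference. Everything in it is an assumption for illustration: the additive model, the `observed_gain` helper, and all the numbers are hypothetical, and none of them come from Boot et al. (2013).

```python
def observed_gain(true_effect, expectation, placebo_weight):
    """Toy model: observed improvement = real training effect
    plus a boost proportional to how much benefit the subject expects."""
    return true_effect + placebo_weight * expectation


# Hypothetical values, chosen only to illustrate the argument.
PLACEBO_WEIGHT = 0.5  # assumed points gained per unit of expected benefit

# Assume training has NO real effect in either group.
# Subjects expect a lot from the action game, little from the control game.
action_game = observed_gain(true_effect=0.0, expectation=4.0,
                            placebo_weight=PLACEBO_WEIGHT)
active_control = observed_gain(true_effect=0.0, expectation=1.0,
                               placebo_weight=PLACEBO_WEIGHT)

# The groups differ even though the true effect is zero.
apparent_effect = action_game - active_control
print(apparent_effect)  # 1.5

# If expectations really were matched, the spurious difference would vanish.
matched_control = observed_gain(true_effect=0.0, expectation=4.0,
                                placebo_weight=PLACEBO_WEIGHT)
print(action_game - matched_control)  # 0.0
```

In this sketch the active control only does its job when the two `expectation` values are equal, which is exactly what Boot et al. argue often isn't the case.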

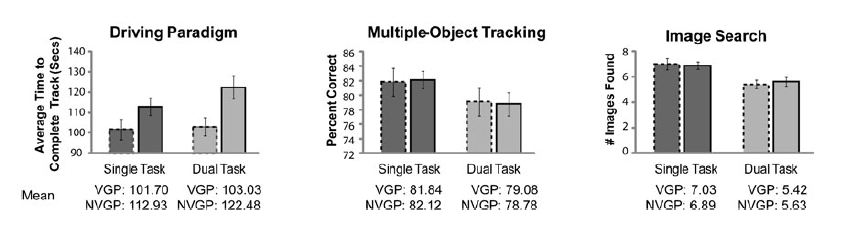

The experimenters gathered some data on this point. They had subjects watch a brief video demonstrating what an action video game was like or what the active control game was like. Then they showed the subjects videos of the measures of attention and perception that are often used in these experiments. And they asked subjects, "If you played the video game a lot, do you think it would influence how well you would do on those other tasks?"

In other words, subjects see the underlying similarities between games and the outcome measures, and they figure that higher similarity between them means a greater likelihood of transfer.

As the authors note, this problem is not limited to the video gaming literature; the need for an active control that deals with subject expectations also applies to the brain training literature.

More broadly, it applies to studies of classroom interventions. Many of these studies don't use active controls at all. The control is business-as-usual.

In that case, I suspect you have double the problem. You not only have the placebo effect affecting students, you also have one set of teachers asked to do something new, and another set teaching as they typically do. It seems at least plausible that the former will be extra reflective on their practice--they would almost have to be--and that alone might lead to improved student performance.

It's hard to say how big these placebo effects might be, but this is something to watch for when you read research in the future.

Reference

Boot, W. R., Simons, D. J., Stothart, C., & Stutts, C. (2013). The pervasive problems with placebos in psychology: Why active control groups are not sufficient to rule out placebo effects. Perspectives on Psychological Science, 8, 445-454.
