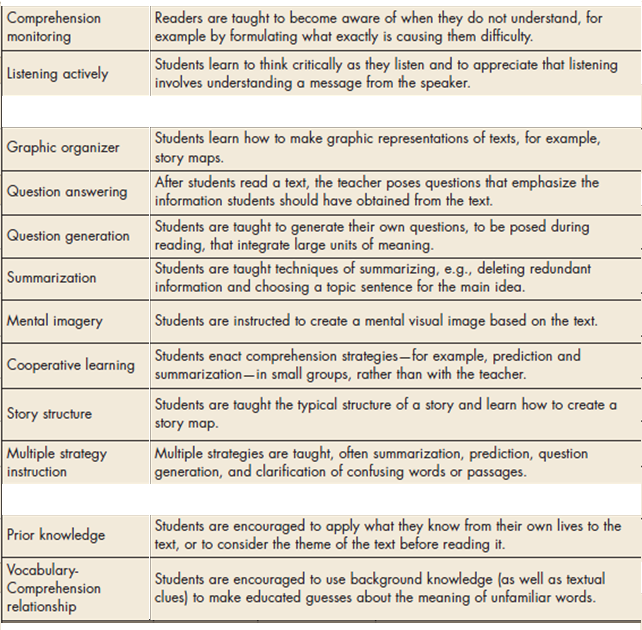

I’ve written about them before (article here). The next paragraph provides just a brief summary of what I’ve written. The figure above shows the strategies themselves, in case you’re not familiar with them.

The short version of my conclusion is that they don’t really improve the comprehension process per se. Rather, they help kids who have become good decoders realize that the point of reading is communication, and that if they can successfully say written words aloud but cannot understand what they’ve read, that’s a problem. Evidence for this point of view includes data showing that kids don’t benefit much from reading comprehension instruction after 7th grade, likely because by then they’ve all drawn this conclusion, and that more practice with reading comprehension strategies brings no additional benefit. It’s a one-time increment.

Whatever the proportion of time spent on these strategies, much of it is wasted, at least if educators think it’s improving comprehension, because the one-time boost to comprehension can be had in perhaps five or ten sessions of 20 or 30 minutes each.

Some reading comprehension strategies might be useful for other reasons. For example, a teacher might want her class to create a graphic organizer as a way of understanding how an author builds a narrative arc.

The wasted time obviously represents a significant opportunity cost. But has anyone ever considered that implementing these strategies makes reading REALLY BORING? Everyone agrees that one of our long-term goals in reading instruction is to get kids to love reading. We hope that more kids will spend more time reading and less time playing video games, watching TV, and so on.

To me, reading comprehension strategies seem to take a process that could bring joy and turn it into work.
