A piece appeared in the New York Post on August 27 with the headline "It's digital heroin: How screens turn kids into psychotic junkies."

Even allowing for the fact that authors don't write headlines, this article is hyperbolic and poorly argued. I said as much on Twitter and my Facebook page, and several people asked me to elaborate. So....

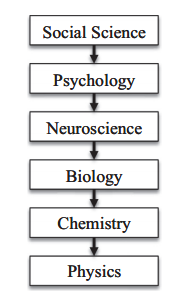

First, to say "Recent brain imaging research is showing that they [games] affect the brain’s frontal cortex — which controls executive functioning, including impulse control — in exactly the same way that cocaine does," is transparently false. Engaging in a behavior cannot affect your brain in exactly the same way a psychoactive drug does. Saying it does is a scare tactic.

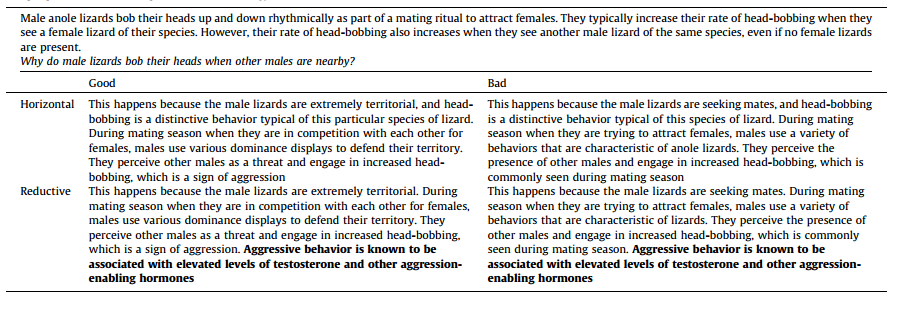

Lots of activities give people pleasure and it's sensible that these activities show some physiological similarities, given the similarity in experience. But if you want to suggest that the analogy (games and cocaine both change dopamine levels, therefore they are similar in other ways) extends to other characteristics, you need direct evidence for those other characteristics. Absent that, it's as though you buy a pet bunny and I say "My God, bunnies have four legs. Don't you realize TIGERS have four legs? You've brought a tiny tiger into your home!"

On the addiction question: The American Psychiatric Association has considered including Internet Addiction in the DSM-5 and has elected not to, though it's still being considered. Research is ongoing, and technology changes quickly, so it wouldn't make sense to close the book on the issue.

To qualify as an addiction, you need more than the fact that the person likes it a lot and does it a lot. Addictions are usually characterized by:

•Tolerance--increased amount of the behavior becomes necessary

•Withdrawal symptoms if behavior is stopped

•Person wants to quit but can’t

•Lots of time spent on the behavior

•Engages in the behavior even though it’s counterproductive

The last two of these seem a good fit for kids who spend a huge amount of time on gaming or other digital technologies. The others, less obviously so.

Let me be plain: I have plenty of concerns regarding the content and volume of children's time spent with digital technologies. I've written about this issue elsewhere, and my own children who are still at home (ages 13, 11, and 9) face stringent restrictions on both.

But working through a complex issue like the social, emotional, cognitive, and motivational consequences of omnipresent screens in daily life requires clear-headed thinking, not melodramatic claims based on thin analogies.
