Today, I offer a plea and a suggestion for making education blogs less boring, specifically on the subject of standardized testing.

I begin with two propositions about human behavior.

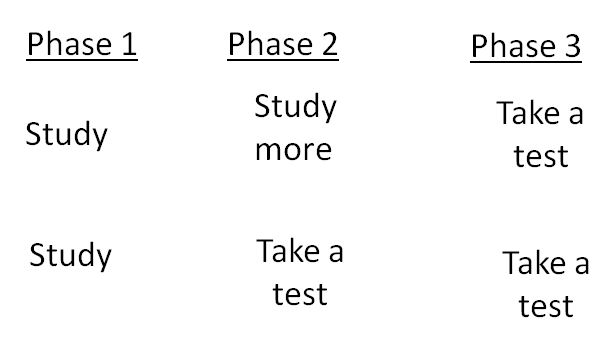

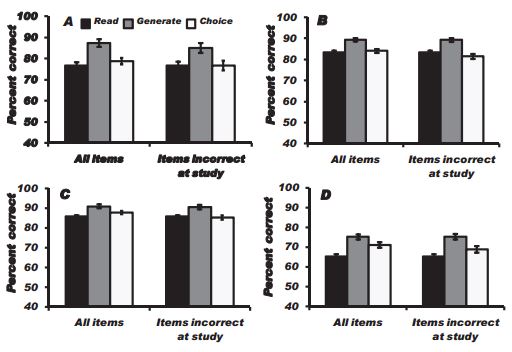

- Proposition 1: If you provide incentives for X, people are more likely to do what they think will help them get X. They may even attempt to get X through means that are counterproductive.

- Proposition 2: If we use procedure Z to change Y in order to make it more like Y’, we need to measure Y in order to know whether procedure Z is working. We have to be able to differentiate Y and Y’.

On Proposition 1: Standardized tests typically gain validity by showing that scores are associated with some outcome you care about. You seldom care about the items on the test specifically. You care about what they signify. Sometimes tests have face validity, meaning the items look like they test what they are meant to test: a purported history test asks questions about history, for example. Often a test lacks face validity and is still valid. A well-constructed vocabulary test, for instance, can give you a pretty good idea of someone’s IQ.

Just as body temperature is a reliable, partial indicator of certain types of disease, a test score is a reliable, partial indicator of certain types of school outcomes. But in most circumstances your primary goal is not a normal body temperature; it’s that the body is healthy, in which case body temperature will be normal as a natural consequence of the healthy state.

On Proposition 2: Some form of assessment is necessary. Without it, you have no idea how things are going. You won’t find many defenders of No Child Left Behind, but one thing we should remember is that the required testing did expose a number of schools—mostly ones serving disadvantaged children—where students were performing very poorly. And assessments have to be meaningful, i.e., reliable and valid. Portfolio assessments, for example, sound nice, but there are terrible problems with reliability and validity. It’s very difficult to get them to do what they are meant to do.

So here’s my plea. Admit that both Proposition 1 and Proposition 2 are true, and apply to testing children in schools.

People who are angry about the unintended social consequences of standardized testing have a legitimate point. They are not all apologists for lazy teachers or advocates of the status quo. Calling for high-stakes testing while taking no account of these social consequences, offering no solution to the problem . . . that's boring.

People who insist on standardized assessments have a legitimate point. They are not all corporate stooges and teacher-haters. Deriding “bubble sheet” testing while offering no viable alternative method of assessment . . . that's boring.

Naturally, the real goal is not to entertain me with more interesting blog posts. The goal is to move the conversation forward. The landscape will likely change in consequential ways in the next two years. This is the time to have substantive conversations.
