I (like everyone else) am always eager for documents that clearly summarize a large, complex literature. One such literature of urgent interest is the role of self-regulation in academic success. A new working paper from Transforming Education (full disclosure: I’m on their advisory board) does a great job of highlighting the important findings regarding non-cognitive skills, a not-very-precise term originating in economics that refers mostly to self-control and social competence.

Part of the fun and ongoing fascination of science is "the effect that ought not to work, yet does."

The impact of values affirmation on academic performance is such an effect. Values affirmation "undoes" the effect of stereotype threat (also called identity threat). Stereotype threat occurs when a person is concerned about confirming a negative stereotype about his or her group. In other words, a boy is so consumed with thinking "Everyone expects me to do poorly on this test because I'm African-American" that his performance actually is compromised (see Walton & Spencer, 2009 for a review).

One way to combat stereotype threat is to give the student better resources to deal with the threat--make the student feel more confident, more able to control the things that matter in his or her life. That's where values affirmation comes in. In this procedure, students are provided a list of values (e.g., relationships with family members, being good at art) and are asked to pick three that are most important to them and to write about why they are so important. In the control condition, students pick three values they imagine might be important to someone else. Randomized controlled trials show that this brief intervention boosts school grades (e.g., Cohen et al., 2006).

Why? One theory is that values affirmation gives students a greater sense of belonging, of being more connected to other people. (The importance of social connection is an emerging theme in other research areas. For example, you may have heard about the studies showing that people are less anxious when anticipating a painful electric shock if they are holding the hand of a friend or loved one.)

A new study (Shnabel et al., 2013) directly tested the idea that writing about social belonging might be a vital element in making values affirmation work. In Experiment 1 they tested 169 Black and 186 White seventh graders in a correlational study. The students did the values-affirmation writing exercise, as described above. The dependent measure was change in GPA (pre-intervention vs. post-intervention).
The experimenters found that writing about social belonging in the writing assignment was associated with a greater increase in GPA for Black students (but not for White students, indicating that the effect is due to a reduction in stereotype threat). In Experiment 2, they used an experimental design, testing 62 male and 55 female college undergraduates on a standardized math test. Some were specifically told to write about social belonging and others were given standard affirmation writing instructions. Female students in the former group outscored those in the latter group. (And there was no effect for male students.)

The brevity of the intervention relative to the apparent duration of the effect still surprises me. But this new study gives some insight into why it works in the first place.

References:

Cohen, G. L., Garcia, J., Apfel, N., & Master, A. (2006). Reducing the racial achievement gap: A social-psychological intervention. Science, 313, 1307-1310.

Shnabel, N., Purdie-Vaughns, V., Cook, J. E., Garcia, J., & Cohen, G. L. (2013). Demystifying values-affirmation interventions: Writing about social belonging is a key to buffering against identity threat. Personality and Social Psychology Bulletin,

Walton, G. M., & Spencer, S. J. (2009). Latent ability: Grades and test scores systematically underestimate the intellectual ability of negatively stereotyped students. Psychological Science, 20, 1132-1139.

One of the great intellectual pleasures is to hear an idea that not only seems right, but that strikes you as so terribly obvious (now that you've heard it) that you're in disbelief that no one has ever made the point before. I tasted that pleasure this week, courtesy of a paper by Walter Boot and colleagues (2013). The paper concerned the adequacy of control groups in intervention studies--interventions like (but not limited to) "brain games" meant to improve cognition, and the playing of video games, thought to improve certain aspects of perception and attention.
To appreciate the point made in this paper, consider what a control group is supposed to be and do. It is supposed to be a group of subjects as similar to the experimental group as possible, except for the critical variable under study. The performance of the control group is to be compared to the performance of the experimental group, which should allow an assessment of the impact of the critical variable on the outcome measure.

Now consider video gaming or brain training. Subjects in an experiment might very well guess the suspected relationship between the critical variable and the outcome. They have an expectation as to what is likely to happen. If they do, then there might be a placebo effect--people perform better on the outcome test simply because they expect that the training will help, just as some people feel less pain when given a placebo that they believe is an analgesic.

The standard way to deal with that problem is to use an "active control." That means that the control group doesn't do nothing--they do something, but it's something that the experimenter does not believe will affect the outcome variable. So in some experiments testing the impact of action video games on attention and perception, the active control plays slow-paced video games like Tetris or Sims.

The purpose of the active control is that it is supposed to make expectations equivalent in the two groups. Boot et al.'s simple and valid point is that it probably doesn't do that. People don't believe playing Sims will improve attention. The experimenters gathered some data on this point. They had subjects watch a brief video demonstrating what an action video game was like or what the active control game was like. Then they showed them videos of the measures of attention and perception that are often used in these experiments.
And they asked subjects "if you played the video game a lot, do you think it would influence how well you would do on those other tasks?"

And sure enough, people think that action video games will help on measures of attention and perception. Importantly, they don't think that they would have an impact on a measure like story recall. And subjects who saw the game Tetris were less likely to think it would help the perception measures, but were more likely to say it would help with mental rotation. In other words, subjects see the underlying similarities between games and the outcome measures, and they figure that higher similarity between them means a greater likelihood of transfer.

As the authors note, this problem is not limited to the video gaming literature; the need for an active control that deals with subject expectations also applies to the brain training literature. More broadly, it applies to studies of classroom interventions. Many of these studies don't use active controls at all. The control is business-as-usual. In that case, I suspect you have double the problem. You not only have the placebo effect affecting students, you also have one set of teachers asked to do something new, and another set teaching as they typically do. It seems at least plausible that the former will be extra reflective on their practice--they would almost have to be--and that alone might lead to improved student performance.

It's hard to say how big these placebo effects might be, but this is something to watch for when you read research in the future.

Reference

Boot, W. R., Simons, D. J., Stothart, C. & Stutts, C. (2013). The pervasive problems with placebos in psychology: Why active control groups are not sufficient to rule out placebo effects. Perspectives on Psychological Science, 8, 445-454.
If the title of this blog struck you as brash, I came by it honestly: it's the title of a terrific new paper by three NYU researchers (Protzko, Aronson & Blair, 2013). The authors sought to review all interventions meant to boost intelligence, and they cast a wide net, seeking any intervention for typically-developing children from birth to kindergarten age that used a standard IQ test as the outcome measure, and that was evaluated in a randomized controlled trial (RCT).

A feature of the paper I especially like is that none of the authors publish in the exact areas they review. Blair mostly studies self-regulation, and Aronson, gaps due to race, ethnicity or gender. (Protzko is a graduate student studying with Aronson.) So the paper is written by people with a lot of expertise, but who don't begin their review with a position they are trying to defend. They don't much care which way the data come out.

So what did they find? The paper is well worth reading in its entirety--they review a lot in just 15 pages--but there are four marquee findings.

First, the authors conclude that infant formula supplemented with long-chain polyunsaturated fatty acids boosts intelligence by about 3.5 points, compared to formula without. They conclude that the same boost is observed if pregnant mothers receive the supplement. There are not sufficient data to conclude that other supplements--riboflavin, thiamine, niacin, zinc, and B-complex vitamins--have much impact, although the authors suggest (with extreme caution) that B-complex vitamins may prove helpful.

Second, interactive reading with a child raises IQ by about 6 points. The interactive aspect is key; interventions that simply encouraged reading or provided books had little impact. Effective interventions provided information about how to read to children: asking open-ended questions, answering questions children posed, following children's interests, and so on.
Third, the authors report that sending a child to preschool raises his or her IQ by a little more than 4 points. Preschools that include a specific language development component raise IQ scores by more than 7 points. There were not enough studies to differentiate what made some preschools more effective than others.

Fourth, the authors report on interventions that they describe as "intensive," meaning they involved more than preschool alone. The researchers sought to significantly alter the child's environment to make it more educationally enriching. All of these studies involved low-SES children (following the well-established finding that low-SES kids have lower IQs than their better-off counterparts due to differences in opportunity; I review that literature here). Such interventions led to a 4 point IQ gain, and a 7 point gain if the intervention included a center-based component. The authors note the interventions have too many features to enable them to pinpoint the cause, but they suggest that the data are consistent with the hypothesis that the cognitive complexity of the environment may be critical. They were able to confidently conclude (to their and my surprise) that earlier interventions helped no more than those starting later.

Those are the four interventions with the best track record. (Some others fared less well. Training working memory in young children "has yielded disappointing results.")

The data are mostly unsurprising, but I still find the article a valuable contribution: a reliable, easy-to-understand review on an important topic. Even better, this looks like the beginning of what the authors hope will be a longer-term effort they are calling the Database on Raising Intelligence--a compendium of RCTs based on interventions meant to boost IQ. That may not be everything we need to know about how to raise kids, but it's a darn important piece, and such a Database will be a welcome tool.
An experiment is a question which science poses to Nature, and a measurement is the recording of nature’s answer. --Max Planck

You can't do science without measurement. That blunt fact might give pause when people emphasize non-cognitive factors in student success and in efforts to boost student success. "Non-cognitive factors" is a misleading but entrenched catch-all term for factors such as motivation, grit, self-regulation, social skills. . . in short, mental constructs that we think contribute to student success, but that don't contribute directly to the sorts of academic outcomes we measure, in the way that, say, vocabulary or working memory do.

Non-cognitive factors have become hip (honestly, if I hear about the Marshmallow Study just one more time, I'm going to become seriously dysregulated), and there are plenty of data to show that researchers are on to something important. But are they on to anything that educators are likely to be able to use in the next few years? Or are we going to be defeated by the measurement problem?

There is a problem, there's little doubt. A term like "self-regulation" is used in different senses: the ability to maintain attention in the face of distraction, the inhibition of learned or automatic responses, or the quelling of emotional responses. The relation among them is not clear. Further, these might be measured by self-ratings, teacher ratings, or various behavioral tasks.

But surprisingly enough, different measures do correlate, indicating that there is a shared core construct (Sitzmann & Ely, 2011). And Angela Duckworth (Duckworth & Quinn, 2009) has made headway in developing a standard measure of grit (distinguished from self-control by its emphasis on the pursuit of a long-term goal). So the measurement problem in non-cognitive factors shouldn't be overstated. We're not at ground zero on the problem. At the same time, we're far from agreed-upon measures. Just how big a problem is that?
It depends on what you want to do. If you want to do science, it's not a problem at all. It's the normal situation. That may seem odd: how can we study self-regulation if we don't have a clear idea of what it is? Crisp definitions of constructs and taxonomies of how they relate are not prerequisites for doing science. They are the outcome of doing science. We fumble along with provisional definitions and refine them as we go along. The problem of measurement seems more troubling for education interventions. Suppose I'm trying to improve student achievement by increasing students' resilience in the face of failure. My intervention is to have preschool teachers model a resilient attitude toward failure and to talk about failure as a learning experience. Don't I need to be able to measure student resilience in order to evaluate whether my intervention works? Ideally, yes, but that lack may not be an experimental deal-breaker. My real interest is student outcomes like grades, attendance, dropout, completion of assignments, class participation and so on. There is no reason not to measure these as my outcome variables. The disadvantage is that there are surely many factors that contribute to each outcome, not just resilience. So there will be more noise in my outcome measure and consequently I'll be more likely to conclude that my intervention does nothing when in fact it's helping. The advantage is that I'm measuring the outcome I actually care about. Indeed, there would not be much point in crowing about my ability to improve my psychometrically sound measure of resilience if such improvement meant nothing to education. There is a history of this approach in education. It was certainly possible to develop and test reading instruction programs before we understood and could measure important aspects of reading such as phonemic awareness. 
In fact, our understanding of pre-literacy skills has been shaped not only by basic research, but by the success and failure of preschool interventions. The relationship between basic science and practical applications runs both ways. So although the measurement problem is a troubling obstacle, it's neither atypical nor final.

References

Duckworth, A. L., & Quinn, P. D. (2009). Development and validation of the Short Grit Scale (GRIT–S). Journal of Personality Assessment, 91, 166-174.

Sitzmann, T., & Ely, K. (2011). A meta-analysis of self-regulated learning in work-related training and educational attainment: What we know and where we need to go. Psychological Bulletin, 137, 421-442.

A lot of data from the last couple of decades shows a strong association between executive functions (the ability to inhibit impulses, to direct attention, and to use working memory) and positive outcomes in school and out of school (see review here). Kids with stronger executive functions get better grades, are more likely to thrive in their careers, are less likely to get in trouble with the law, and so forth. Although the relationship is correlational and not known to be causal, understandably researchers have wanted to know whether there is a way to boost executive function in kids.

Tools of the Mind (Bodrova & Leong, 2007) looked promising. It's a full preschool curriculum consisting of some 60 activities, inspired by the work of psychologist Lev Vygotsky. Many of the activities call for the exercise of executive functions through play. For example, when engaged in dramatic pretend play, children must use working memory to keep in mind the roles of other characters and suppress impulses in order to maintain their own character identity. (See Diamond & Lee, 2011, for thoughts on how and why such activities might help students.)

A few studies of relatively modest scale (but not trivial--100-200 kids) indicated that Tools of the Mind has the intended effect (Barnett et al., 2008; Diamond et al., 2007). But now some much larger scale follow-up studies (800-2000 kids) have yielded discouraging results.

These studies were reported at a symposium this spring at a meeting of the Society for Research on Educational Effectiveness. (You can download a pdf summary here.) Sarah Sparks covered this story for Ed Week when it happened in March, but it otherwise seemed to attract little notice. Researchers at the symposium reported the results of three studies. Tools of the Mind did not have an impact in any of the three.

What should we make of these discouraging results? It's too early to conclude that Tools of the Mind simply doesn't work as intended. It could be that there are as-yet unidentified differences among kids such that it's effective for some but not others. It may also be that the curriculum is more difficult to implement correctly than would first appear to be the case. Perhaps the teachers in the initial studies had more thorough training.

Whatever the explanation, the results are not cheering. It looked like we might have been on to a big-impact intervention that everyone could get behind. Now we are left with the dispiriting conclusion "More study is needed."
Barnett, W., Jung, K., Yarosz, D., Thomas, J., Hornbeck, A., Stechuk, R., & Burns, S. (2008). Educational effects of the Tools of the Mind curriculum: A randomized trial. Early Childhood Research Quarterly, 23, 299–313.

Bodrova, E. & Leong, D. (2007). Tools of the Mind: The Vygotskian approach to early childhood education. Second edition. New York: Merrill.

Diamond, A. & Lee, K. (2011). Interventions shown to aid executive function development in children 4-12 years old. Science, 333, 959-964.

Diamond, A., Barnett, W. S., Thomas, J., & Munro, S. (2007). Preschool program improves cognitive control. Science, 318, 1387-1388.

Steven Levitt, of Freakonomics fame, has unwittingly provided an example of how science applied to education can go wrong.

On his blog, Levitt cites a study he and three colleagues published (as an NBER working paper). The researchers rewarded kids for trying hard on an exam. As Levitt notes, the goal of previous research has been to get kids to learn more. That wasn't the goal here. It was simply to get kids to try harder on the exam itself, to really show everything that they knew.

Among the findings: (1) it worked. Offering kids a payoff for good performance prompted better test scores; (2) it was more effective if, instead of offering a payoff for good performance, researchers gave them the payoff straight away and threatened to take it away if the student didn't get a good score (an instance of a well-known and robust effect called loss aversion); (3) children prefer different rewards at different ages. As Levitt puts it, "With young kids, it is a lot cheaper to bribe them with trinkets like trophies and whoopee cushions, but cash is the only thing that works for the older students."

There are a lot of issues one could take up here, but I want to focus on Levitt's surprise that people don't like this plan. He writes, "It is remarkable how offended people get when you pay students for doing well – so many negative emails and comments."

Levitt's surprise gets at a central issue in the application of science to education. Scientists are in the business of describing (and thereby enabling predictions of) the Natural world. One such set of phenomena concerns when students put forth effort and when they don't. Education is not a scientific enterprise. The purpose is not to describe the world, but to change it, to make it more similar to some ideal that we envision. (I wrote about this distinction at some length in my new book. I also discussed it in this brief video.) Thus science is ideally value-neutral.
Yes, scientists seldom live up to that ideal; they have a point of view that shapes how they interpret data, generate theories, etc., but neutrality is an agreed-upon goal, and lack of neutrality is a valid criticism of how someone does science. Education, in contrast, must entail values, because it entails selecting goals. We want to change the world--we want kids to learn things--facts, skills, values. Well, which ones? There's no better or worse answer to this question from a scientific point of view. A scientist may know something useful to educators and policymakers, once the educational goal is defined; i.e., the scientist offers information about the Natural world that can make it easier to move towards the stated goal. (For example, if the goal is that kids be able to count to 100 and to understand numbers by the end of preschool, the scientist may offer insights into how children come to understand cardinality.) What scientists cannot do is use science to evaluate the wisdom of stated goals. And now we come to people's hostility to Levitt's idea of rewards for academic work. I'm guessing most people don't like the idea of rewards for the same reason I don't. I want my kids to see learning as a process that brings its own reward. I want my kids to see effort as a reflection of their character, to believe that they should give their all to any task that is their responsibility, even if the task doesn't interest them. There is, of course, a large, well-known research literature on the effect of extrinsic rewards on motivation. Readers of this blog are probably already familiar with it--if so, skip the next paragraph. The problem is one of attribution. When we observe other people act, we speculate on their motives. If I see two people gardening--one paid and the other unpaid--I'm likely to assume that one gardens because he's paid and the other because he enjoys gardening. It turns out that we make these attributions about our own behavior as well. 
If my child tries her hardest on a test she's likely to think "I'm the kind of kid who always does her best, even on tasks she doesn't care for." If you pay her for her performance she'll think "I'm the kind of kid who tries hard when she's paid." This research began in the 1970s and has held up very well. Kids work harder for rewards. . . until the rewards stop. Then they engage in the task even less than they did before the rewards started. I summarized some of this work here.

In the technical paper, Levitt cites some of the reviews of this research but downplays the threat, pointing out that when motivation is low to start with, there's not much danger of rewards lowering it further. That's true, and I've made a closely related argument: cash rewards might be used as a last-ditch effort for a child who has largely given up on school. But that would dictate using rewards only with kids who were not motivated to start, not in a blanket fashion as was done in Levitt's study. And I can't see concluding that elementary school kids were so unmotivated that they were otherwise impossible to reach.

In addressing the threat to student motivation with research, Levitt is approaching the issue in the right way (even if I think he's incorrect in how he does so). But on the blog (in contrast to the technical paper), Levitt addresses the threat in the wrong way. He skips the scientific argument and simply belittles the idea that parents might object to someone paying their child for academic work. He writes:

"Perhaps the critics are right and the reason I’m so messed up is that my parents paid me $25 for every A that I got in junior high and high school. One thing is certain: since my only sources of income were those grade-related bribes and the money I could win off my friends playing poker, I tried a lot harder in high school than I would have without the cash incentives. Many middle-class families pay kids for grades, so why is it so controversial for other people to pay them?"
I think Levitt is getting "so many negative emails and comments" because he's got scientific data to serve one type of goal (get kids to try hard on exams), the application of which conflicts with another goal (encourage kids to see academic work as its own reward). So he scoffs at the latter.

I see this blog entry as an object lesson for scientists. We offer something valuable--information about the Natural world--but we hold no status in deciding what to do with that information (i.e., setting goals). In my opinion Levitt's blog entry shows he has a tin ear for the possibility that others do not share his goals for education. If scientists are oblivious to or dismissive of those goals, they can expect not just angry emails, they can expect to be ignored.

Important study on the impact of education on women's attitudes and beliefs:

Mocan & Cannonier (2012) took advantage of a naturally-occurring "experiment" in Sierra Leone. The country suffered a devastating, decade-long civil war during the 1990s, which destroyed much of the country's infrastructure, including schools. In 2001, Sierra Leone instituted a program offering free primary education; attendance was compulsory. This policy provided significant opportunities for girls who were young enough for primary school, but none for older children. Further, resources to implement the program were not equivalent in all districts of the country. The authors used these quirky reasons that the program was more or less accessible to compare girls who participated and those who did not. (Researchers controlled for other variables such as religion, ethnic background, residence in an urban area, and wealth.) The outcome of interest was empowerment, which the researchers defined as "having the knowledge along with the power and the strength to make the right decisions regarding one's own well-being." The outcome measures came from a 2008 study (the Sierra Leone Demographic and Health Survey) which summarized interviews with over 10,000 individuals. The findings: Better educated women were more likely to believe

Better educated women were more likely to endorse these behaviors:

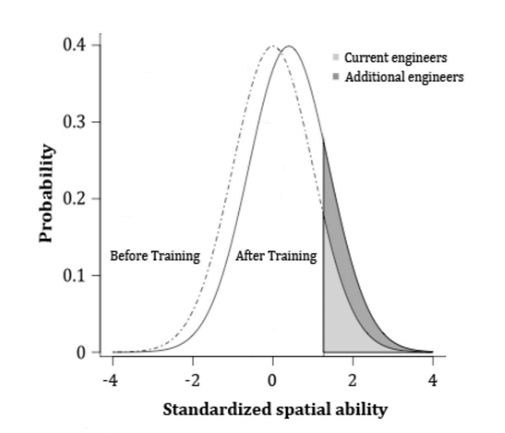

One of the oddest findings in these data is also one of the most important to understanding the changes in attitudes: they are not due to changes in literacy. The researchers drew that conclusion because an increase in education had no impact on literacy, likely because the quality of instruction in schools was very low. The best guess is that the impact of schooling on attitudes was through social avenues.

Mocan, N. H. & Cannonier, C. (2012). Empowering women through education: Evidence from Sierra Leone. NBER working paper 18016.

There is a great deal of attention paid to, and controversy about, the promise of training working memory to improve academic skills, a topic I wrote about here. But working memory is not the only cognitive process that might be a candidate for training. Spatial skills are a good predictor of success in science, mathematics, and engineering.

Now on the basis of a new meta-analysis (Uttal, Meadow, Tipton, Hand, Alden, Warren & Newcombe, in press) researchers claim that spatial skills are eminently trainable. In fact they claim a quite respectable average effect size of 0.47 (Hedges' g) after training (that's across 217 studies).

Training tasks across these many studies included things like visualizing 2D and 3D objects in a CAD program, acrobatic sports training, and learning to use a laparoscope (an angled device used by surgeons). Outcome measures were equally varied, and included standard psychometric measures (like a paper-folding test), tests that demanded imagining oneself in a landscape, and tests that required mentally rotating objects.

Even more impressive: 1) researchers found robust transfer to new tasks, and 2) researchers found little, if any, effect of delay between training and test--the skills don't seem to fade with time, at least for several weeks. (Only four studies included delays of greater than one month.)

This is a long, complex analysis and I won't try to do it justice in a brief blog post.
But the marquee finding is big news. What we'd love to see is an intervention that is relatively brief, not terribly difficult to implement, reliably leads to improvement, and transfers to new academic tasks. That's a tall order, but spatial skills may fill all the requirements. The figure below (from the paper) is a conjecture--if spatial training were widely implemented, and once scaled up we got the average improvement we see in these studies, how many more people could be trained as engineers? The paper is not publicly available, but there is a nice summary here from the collaborative laboratory responsible for the work. I also recommend this excellent article from American Educator on the relationship of spatial thinking to math and science, with suggestions for parents and teachers.
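
For readers who want a feel for the effect size mentioned above, here is a rough sketch of how Hedges' g is computed for a single two-group comparison, and what a value of 0.47 would mean if scores were normally distributed. The group means, standard deviations, and sample sizes below are made up for illustration; they are not taken from the meta-analysis, and the small-sample correction uses the common approximation rather than the exact formula.

```python
import math

def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardized mean difference with the usual small-sample correction."""
    df = n_t + n_c - 2
    # Pool the two groups' variances, weighting each by its degrees of freedom
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df
    d = (mean_t - mean_c) / math.sqrt(pooled_var)  # Cohen's d
    # Common approximation to the correction factor: J = 1 - 3 / (4*df - 1)
    return d * (1 - 3 / (4 * df - 1))

def share_of_controls_below(g):
    """Fraction of the control group scoring below the average trained person,
    ASSUMING normally distributed scores with equal spread in both groups."""
    return 0.5 * (1 + math.erf(g / math.sqrt(2)))

# Hypothetical study: trained group vs. untrained group, 30 people each
g = hedges_g(mean_t=54.7, sd_t=10.0, n_t=30, mean_c=50.0, sd_c=10.0, n_c=30)
share = share_of_controls_below(0.47)
print(f"g for the made-up study: {g:.2f}")
print(f"share of controls below the average trained person: {share:.0%}")
```

Under those assumptions, an effect of 0.47 means the average trained person would outscore roughly two-thirds of untrained people.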

Uttal, D. H., Meadow, N. G., Tipton, E., Hand, L. L., Alden, A. R., Warren, C., & Newcombe, N. S. (2012, June 4). The Malleability of Spatial Skills: A Meta-Analysis of Training Studies. Psychological Bulletin. Advance online publication. doi: 10.1037/a0028446

Newcombe, N. S. (2010). Picture this: Increasing math and science learning by improving spatial thinking. American Educator, Summer, 29-35, 43.

Should kids be allowed to chew gum in class? If a student said "but it helps me concentrate. . ." should we be convinced? If it provides a boost, it's short-lived.

It's pretty well established that a burst of glucose provides a brief cognitive boost (see review here), so the question is whether chewing gum in particular provides any edge over and above that, or whether a benefit would be observed when chewing sugar-free gum.

One study (Wilkinson et al., 2002) compared gum-chewing to no-chewing (and "sham chewing" in which subjects were to pretend to chew gum, which seems awkward). Subjects performed about a dozen tasks, including some of vigilance (i.e., sustaining attention), short-term memory, and long-term memory.

Researchers reported some positive effect of gum-chewing for four of the tests. It's a little hard to tell from the brief write-up, but it appears that the investigators didn't correct their statistics for the multiple tests.

This throw-everything-at-the-wall-and-see-what-sticks approach may be a characteristic of this research. Another study (Smith, 2010) took that same approach and concluded that there were some positive effects of gum chewing for some of the tasks, especially for feelings of alertness. (This study did not use sugar-free gum so it's hard to tell whether the effect is due to the gum or the glucose.)

A more recent study (Kozlov, Hughes & Jones, 2012), using a more standard short-term memory paradigm, found no benefit for gum chewing.

What are we to make of this grab-bag of results? (And please note this blog does not offer an exhaustive review.) A recent paper (Onyper, Carr, Farrar & Floyd, 2011) offers a plausible resolution. They suggest that the act of mastication offers a brief--perhaps ten or twenty minute--boost to cognitive function due to increased arousal. So we might see benefit (or not) to gum chewing depending on the timing of the chewing relative to the timing of cognitive tasks.

The upshot: teachers might allow or disallow gum chewing in their classrooms for a variety of reasons. There is not much evidence to support allowing it on the grounds of a significant cognitive advantage.

EDIT: Someone emailed to ask if kids with ADHD benefit. The one study I know of reported a cost to vigilance with gum-chewing for kids with ADHD.

Kozlov, M. D., Hughes, R. W. & Jones, D. M. (2012). Gummed-up memory: chewing gum impairs short-term recall. Quarterly Journal of Experimental Psychology, 65, 501-513.

Onyper, S. V., Carr, T. L., Farrar, J. S. & Floyd, B. R. (2011). Cognitive advantages of chewing gum. Now you see them now you don't. Appetite, 57, 321-328.

Smith, A. (2010). Effects of chewing gum on cognitive function, mood and physiology in stressed and unstressed volunteers. Nutritional Neuroscience, 13, 7-16.

Wilkinson, L., Scholey, A., & Wesnes, K. (2002). Chewing gum selectively improves aspects of memory in healthy volunteers. Appetite, 38, 235-236.
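
The worry raised above about uncorrected multiple tests can be made concrete with a little arithmetic. The sketch below assumes exactly 12 independent tests (the write-up says only "about a dozen") and uses invented p-values, four of which fall under .05; none of these numbers come from the Wilkinson et al. paper. It shows how likely at least one spurious "hit" is when there is no real effect, and how many of the four hits would survive Holm's step-down correction, one standard way of adjusting for multiple tests.

```python
def familywise_error(alpha, n_tests):
    """Chance of at least one false positive across independent tests
    when no real effect exists."""
    return 1 - (1 - alpha) ** n_tests

def holm_rejections(p_values, alpha=0.05):
    """Holm's step-down procedure: True where the null hypothesis is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p-value is compared against alpha / (m - k + 1)
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p-values fail too
    return reject

# Invented p-values for 12 tasks; four are below the conventional .05 cutoff
p_values = [0.003, 0.02, 0.03, 0.04, 0.20, 0.35, 0.41, 0.50, 0.62, 0.70, 0.81, 0.93]

fwe = familywise_error(0.05, len(p_values))
uncorrected_hits = sum(p < 0.05 for p in p_values)
corrected_hits = sum(holm_rejections(p_values))

print(f"chance of at least one false positive: {fwe:.0%}")
print(f"hits without correction: {uncorrected_hits}, with Holm correction: {corrected_hits}")
```

With a dozen tests, the chance of at least one false positive is close to a coin flip, which is why a handful of uncorrected "significant" results is weak evidence on its own.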
