But lazy teachers are not the only cause. Education policy makers are also to blame, according to Weil. She writes that “valorizing self-regulation shifts the focus away from an impersonal, overtaxed, and underfunded school system and places the burden for overcoming those shortcomings on its students.”

I can see why Weil and her husband were angry when their daughter’s teacher suggested occupational therapy simply because the child’s behavior was an inconvenience to him. But I don’t take that to mean that there is necessarily a widespread problem in the psyche of American teachers. I take that to mean that their daughter’s teacher was acting like a selfish bastard.

The problem with stories, of course, is that there are stories to support nearly anything. For every story a parent could tell about a teacher diagnosing typical behavior as a problem, a teacher could tell a story about a child who really could do with some therapeutic help, and whose parents were oblivious to that fact.

What about evidence beyond stories?

Weil cites a study by Duncan et al. (2007) that analyzed six large data sets and found social-emotional skills were poor predictors of later success.

She also points out that creativity among American school kids dropped between 1984 and 2008 (as measured by the Torrance Tests of Creative Thinking), and she notes, “Not coincidentally, that decrease happened as schools were becoming obsessed with self-regulation.”

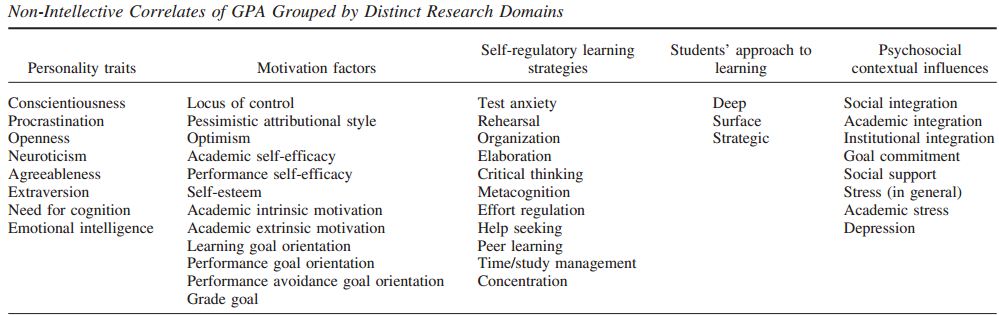

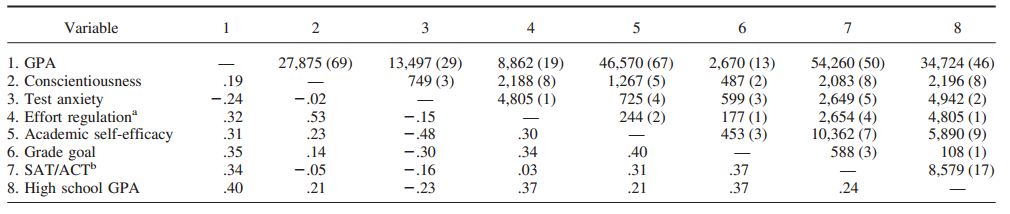

There is a problem here. Weil uses different terms interchangeably: self-regulation, grit, social-emotional skills. They are not the same thing. Self-regulation (most simply put) is the ability to hold back an impulse when you think that the impulse will not serve other interests. (The marshmallow study would fit here.) Grit refers to dedication to a long-term goal, one that might take years to achieve, like winning a spelling bee or learning to play the piano proficiently. Hence, you can have lots of self-regulation but not be very gritty. Social-emotional skills might have self-regulation as a component, but the term refers to a broader complex of skills in interacting with others.

These are not niggling academic distinctions. Weil is right that some research indicates a link between social-emotional skills and desirable outcomes, and some doesn’t. But there is quite a lot of research showing associations between self-control and positive outcomes for kids, including academic outcomes, getting along with peers, parents, and teachers, and the avoidance of bad teen outcomes (early unwanted pregnancy, problems with drugs and alcohol, etc.). I reviewed those studies here. There is another literature showing associations of grit with positive outcomes (e.g., Duckworth et al., 2007).

Of course, those positive outcomes may carry a cost. We may be getting better test scores (and fewer drug and alcohol problems) but losing kids’ personalities. Weil calls on the reader’s schema of a “wild child,” that is, an irrepressible imp who may sometimes be exasperating, but whose very lack of self-regulation is the source of her creativity and personality.

So there’s a case to be made that American society is going too far in emphasizing self-regulation. But the way to make it is not to suggest that the natural consequence of this emphasis is the crushing of children’s spirits because self-regulation is the same thing as no exuberance. The way to make the case is to show us that we’re overdoing self-regulation: that kids feel burdened, anxious, worried about their behavior.

Weil doesn’t have data that would bear on this point. I don’t either. But my perspective definitely differs from hers. When I visit classrooms or wander the aisles of Target, I do not feel that American kids are over-burdened by self-regulation.

As for the decline in creativity from 1984 to 2008 being linked to an increased focus on self-regulation…I have to disagree with Weil’s suggestion that it’s not a coincidence (setting aside the adequacy of the creativity measure). I think it might very well be a coincidence. Note that scores on the mathematics portion of the long-term NAEP increased during the same period. Why not suggest that kids’ improvement in a rigid, formulaic understanding of math inhibited their creativity?

Can we talk about important education issues without hyperbole?

References

Duckworth, A. L., Peterson, C., Matthews, M. D., & Kelly, D. R. (2007). Grit: Perseverance and passion for long-term goals. Journal of Personality and Social Psychology, 92(6), 1087.

Duncan, G. J., Dowsett, C. J., Claessens, A., Magnuson, K., Huston, A. C., Klebanov, P., ... & Japel, C. (2007). School readiness and later achievement. Developmental Psychology, 43(6), 1428.
